As war with Russia drags on, ultrarealistic AI videos attempt to portray Ukrainian soldiers in peril

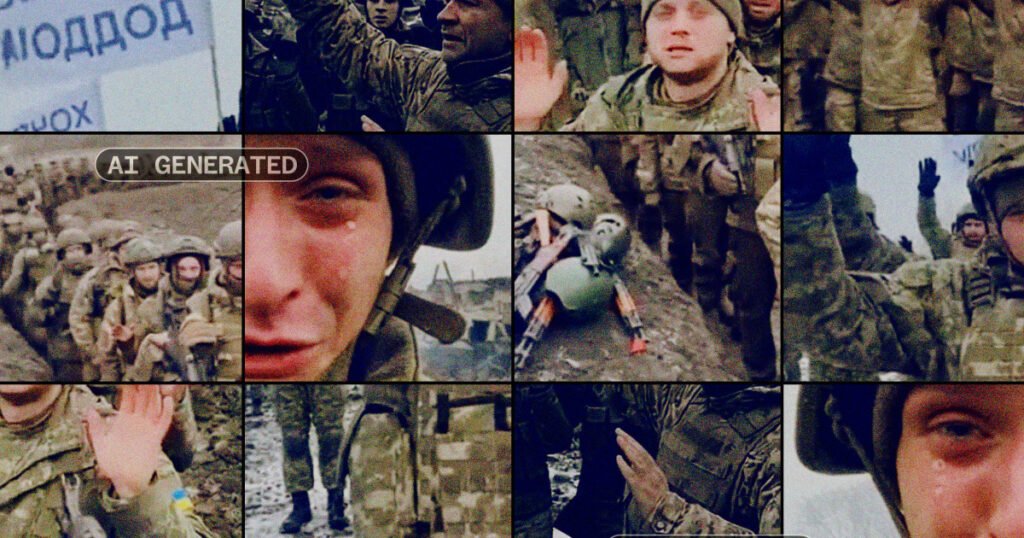

The videos spread on YouTube, TikTok, Facebook and X. All sought to portray Ukrainian soldiers as reluctant to fight and ready to give up.

They appear to be the latest disinformation salvo meant to warp public perceptions of Russia’s war with Ukraine. And while it’s not clear who created or posted the videos, they add to a growing body of false information that has only become more sophisticated and harder to spot.

“False claims created using Sora are much harder to detect and debunk. Even the best AI detectors sometimes struggle,” said Alice Lee, a Russian influence analyst with NewsGuard, a nonpartisan data, analysis and journalism platform that identifies reliable and deceptive information online. “The fact that many videos have no visual inconsistencies means that members of the public might watch through and scroll past such videos on platforms like TikTok, with no idea that the video they’ve just seen is falsified.”

OpenAI did not respond to a request for comment regarding Sora’s role in creating misleading videos depicting conflict zones specifically, but said in an email: “Sora 2’s ability to generate hyper realistic video and audio raises important concerns around likeness, misuse, and deception.”

“While cinematic action is permitted, we do not allow graphic violence, extremist material, or deception,” the company told NBC News. “Our systems detect and block violating content before it reaches the Sora Feed, and our investigations team actively dismantles influence operations.”

AI-generated video has evolved rapidly in recent years from basic and crude to near-perfect, with many experts increasingly warning that there could soon be few ways to easily discern real from fake. OpenAI’s Sora 2, released in October, is among the most impressive video generators, with Sora-generated clips now routinely deceiving viewers.

Meanwhile, Russia’s ongoing invasion of Ukraine has been the subject of manipulation efforts since its earliest days, using everything from realistic video game footage to fake war zone livestreams. Many of these disinformation efforts were attributed to Russian state actors.

These Sora videos come as U.S.-backed peace talks remain inconclusive, with about 75% of Ukrainians categorically rejecting Russian proposals to end the war, per a study conducted by the Kyiv International Institute of Sociology. The same study found that 62% of Ukrainians are willing to endure the war for as long as it takes, even as deadly Russian strikes on Ukraine’s capital continue.

The Center for Countering Disinformation, part of Ukraine’s National Security and Defense Council, told NBC News that over the past year there has been a “significant increase in the volume of content created or manipulated using AI” meant to undermine public trust and international support for Ukraine’s government.

“This includes fabricated statements allegedly made on behalf of Ukrainian military personnel or command, as well as fake videos featuring ‘confessions,’ ‘scandals’ or fictional events,” the center said in an email, noting that these videos gain hundreds of thousands of views due to their emotional and sensational nature.

While OpenAI has put some guardrails around what can be made with Sora, it’s unclear how effective they are. The company itself states that while “layered safeguards are in place, some harmful behaviors or policy violations may still circumvent mitigations.”

A NewsGuard study found that Sora 2 “produced realistic videos advancing provably false claims 80 percent of the time (16 out of 20) when prompted to do so.” Of the 20 false claims fed to Sora 2, five were popularized by Russian disinformation operations.

NewsGuard’s study found that even when Sora 2 initially pushed back at the false claims, stating that a prompt “violated its content policies,” researchers were still able to generate footage using different phrasings of those prompts. NBC News was able to produce similar videos on Sora showing Ukrainian soldiers crying, saying they were forced into the military or surrendering with raised arms and white flags in the background.

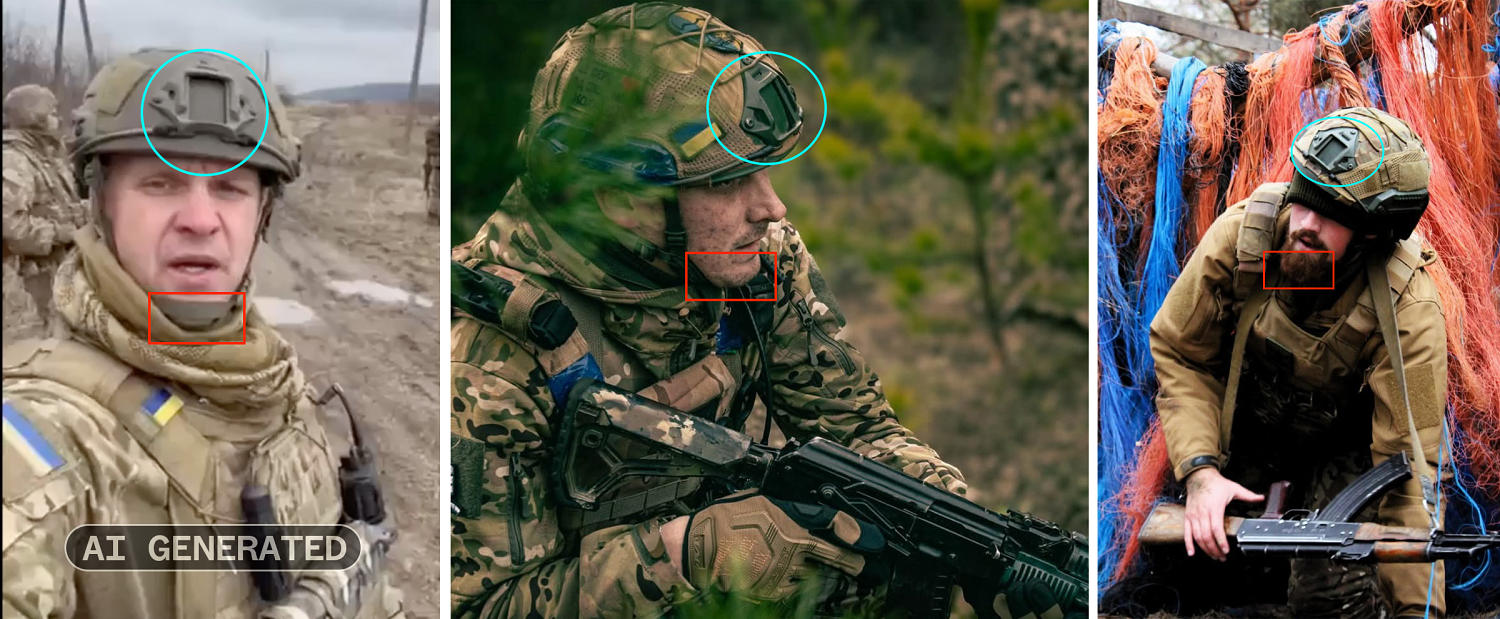

Many AI video generators attempt to label or watermark their creations to signal that they are computer-generated. OpenAI has said that its disinformation safety guardrails for Sora include metadata noting the video’s origins and a moving watermark present on every downloaded video.

But there are ways to remove or minimize those efforts. Some of the Sora videos appeared to have their moving watermarks obscured, something noticeable on close inspection. Many apps and websites now offer users a way to obscure AI watermarks. Other videos seen by NBC News included watermarks that had been covered with text overlaid onto the video.

Despite company policy noting that Sora AI will not generate content showing “graphic violence,” NBC News found a video with Sora’s watermark appearing to show a Ukrainian soldier shot in the head on the front line.

Of the videos analyzed by NBC News, all were posted on TikTok or YouTube Shorts — two platforms banned in Russia but easily accessible to those in Europe and the U.S. Some included emotional subtitles in various languages so users who don’t speak Ukrainian or Russian can understand.

TikTok and YouTube prohibit posting deceptive AI-generated content and deepfakes on their platforms, and both provide “AI-Generated” description tags to alert viewers to realistic-looking footage.

A YouTube spokesperson said that the company removed one of the channels that had posted the videos after it was flagged by NBC News, but that two other videos did not violate its policies and therefore remained on the platform with a label describing them as AI-generated.

Of the AI videos found by NBC News with a TikTok username attached, all had been removed from the platform. A TikTok spokesperson said that as of June 2025, “more than 99% of the violative content we removed was taken down before someone reported it to us, and more than 90% was removed before it got a single view.”

Although these TikTok posts and accounts were quickly removed, the videos live on as reposts on X and Facebook. Neither X nor Facebook responded to NBC News’ requests for comment.

This latest disinformation campaign comes at a time when users are relying more on social media videos to stay informed of the latest news around the world.

“Anyone consuming content online needs to realize that a lot of what we see today in video, photos, and text is indeed AI generated,” said Nina Jankowicz, co-founder and CEO of the American Sunlight Project, an organization working against online disinformation. “Even if Sora introduces [safety] guardrails, in this space there will be other companies, other apps, and other technologies that our adversaries build to try to infect our information space.”
